# I asked Manus to demo my CLI with asciinema

Source: https://www.pgschema.com/blog/demo-with-manus-and-asciinema

## Background

When demonstrating CLI tools like pgschema, [asciinema](https://asciinema.org) is a good way to record the demo. I started by creating the recording manually.

However, even though I had prepared and practiced, the manual recording still included typos and pauses.

Not a smooth experience.

Then it occurred to me that I could use AI to script the demo.

## Process

[Manus](https://manus.im) is a general AI agent. I have been using it for various research tasks, watching it spin up browsers, click links, and extract information. Scripting a terminal demo is a natural extension of its capabilities.

### 1st Recording: Too fast

I started by giving Manus this instruction.

```text

Read https://www.pgschema.com/.

Use asciinema to record a cast to demonstrate. Dump, edit, plan, apply workflow

```

Since pgschema is new, I needed to explicitly provide the URL. On the other hand, I assumed asciinema is well-known and doesn't need further introduction.

It was doing the right thing, but too fast—the demo finished in the blink of an eye. Well, it's AI after all.

### 2nd Recording: Add delays

I then gently asked Manus to simulate human typing behavior:

```text

You are doing it too fast. Please simulate human typing behavior.

This way the cast is more realistic and people can follow along.

```

There were some problems along the way—for example, at one point it didn't generate the cast file as instructed at all.

After some additional tweaking, it was finally on track.
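To make "simulate human typing" concrete, here is a minimal sketch of how a scripted cast could pace its keystrokes. It is not what Manus actually ran; it assumes the asciicast v2 file format (a JSON header line followed by `[time, "o", data]` event lines), and the helper names `typed_events` and `to_cast` are made up for illustration.

```python
import json
import random


def typed_events(command, start=0.0, seed=0, min_delay=0.05, max_delay=0.18):
    """Return asciicast v2 output events that 'type' command one character at a time."""
    rng = random.Random(seed)  # seeded so the generated cast is reproducible
    events, now = [], start
    for ch in command:
        now += rng.uniform(min_delay, max_delay)  # human-like gap between keystrokes
        events.append([round(now, 3), "o", ch])
    now += 0.5  # short pause before "pressing" Enter
    events.append([round(now, 3), "o", "\r\n"])
    return events


def to_cast(events, width=80, height=24):
    """Serialize a header plus events as asciicast v2 (one JSON value per line)."""
    header = {"version": 2, "width": width, "height": height}
    return "\n".join(json.dumps(item) for item in [header, *events]) + "\n"


cast = to_cast(typed_events("pgschema dump --db myapp > schema.sql"))
```

Writing `cast` to a `.cast` file should give something `asciinema play` can replay, with every character arriving after a small random delay instead of all at once.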

### 3rd Recording: Polish

There were still some rough edges.

In particular, the demo added a `CREATE TABLE` change, but to showcase the declarative approach, it would be better to demonstrate an `ALTER TABLE` change: essentially comparing two `CREATE TABLE` statements and generating the `ALTER TABLE` migration.

This is my prompt:

```text
This is too short, we still need to:

1. After dumping, show the initial schema
2. Show exactly what we change in the schema
3. Apply, then confirm with a small pause so it's visible to the viewer
4. Dump and show the schema after the change

Also, to show the declarative approach, we should add a column instead of
creating a table. Creating a table can't demonstrate the difference of the
declarative approach.
```

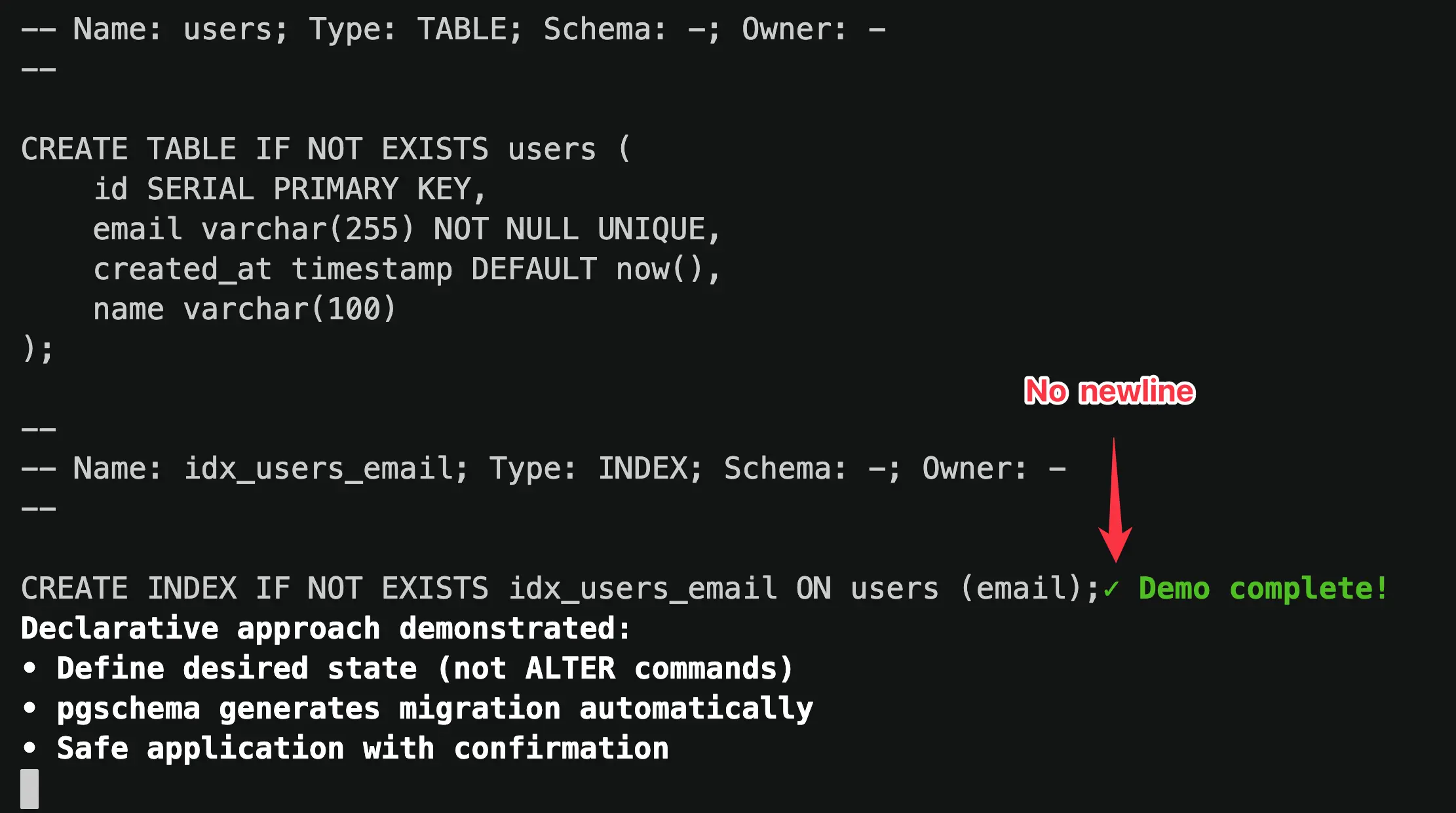

### Found a Bug

Now Manus generated a near-perfect demo with:

1. Clear workflow with all requested steps

2. Proper pauses

3. Color highlighting

4. Readable text

The one imperfection wasn't caused by Manus but by pgschema itself.

The generated dump didn't end with a newline. This was obvious from the playback.

### Final Recording

After I fixed the bug, I asked Manus to generate the final version.

```text
This pgschema version has a bug where the dump doesn't end with a newline.
I have fixed the issue. Please force pull the latest pgschema and rerun
the same script.
```

This time, Manus delivered a clean demo as shown below.

## Reflection

I have made the entire Manus session public and you can check it [here](https://manus.im/share/8fEln1OzxpnsRSU1PnHweG?replay=1).

Manus didn't just execute commands—it interpreted my intent, made mistakes, course-corrected, and even helped me discover a bug in my own software.

Fittingly, the first feature request for pgschema was to make it more AI coding friendly:

An AI-built codebase, demoed by an AI agent, for an audience that will likely include developers working alongside AI. Perhaps this is just a glimpse of how software development is evolving.

# pgschema: Postgres Declarative Schema Migration, like Terraform

Source: https://www.pgschema.com/blog/pgschema-postgres-declarative-schema-migration-like-terraform

## Introduction

While database migrations have traditionally been imperative (writing step-by-step migration scripts), declarative solutions are gaining traction.

`pgschema` differentiates itself from other declarative tools by:

* **Comprehensive Postgres Support**: Handles virtually all schema-level database objects - tables, indexes, views, functions, procedures, triggers, policies, types, and more. Thoroughly tested against Postgres versions 14 through 17.

* **Schema-Level Focus**: Designed for real-world Postgres usage patterns, from single-schema applications to multi-tenant architectures.

* **Terraform-Like Plan-Review-Apply Workflow**: Generate a detailed migration plan before execution, review changes in human-readable, SQL, or JSON formats, then apply with confidence. No surprises in production.

* **Concurrent Change Detection**: Built-in fingerprinting ensures the database hasn't changed between planning and execution.

* **Online DDL Support**: Automatically uses PostgreSQL's non-blocking strategies to minimize downtime during schema changes.

* **Adaptive Transaction**: Intelligently wraps migrations in transactions when possible, with automatic handling of operations like concurrent index creation that require special treatment.

* **Modular Schema Organization**: Supports multi-file schema definitions for better team collaboration and ownership.

* **Dependency Management**: Automatically resolves complex dependencies between database objects using topological sorting, ensuring operations execute in the correct order.

* **No Shadow Database Required**: Unlike other declarative tools, it works directly with your schema files and target database - no temporary databases, no extra infrastructure.

## Comprehensive Postgres Support

`pgschema` supports a wide range of [Postgres features](/syntax/create_table), including:

* Tables, columns, and constraints (primary keys, foreign keys, unique constraints, check constraints)

* Indexes (including partial, functional, and concurrent indexes)

* Views and materialized views

* Functions and stored procedures

* Custom types and domains

* Schemas and permissions

* Row-level security (RLS) policies

* Triggers and sequences

* Comments on database objects

The tool is thoroughly tested against [Postgres versions 14 through 17](https://github.com/pgschema/pgschema/blob/a41ffe8616f430ba36e6b39982c4455632a0dfa7/.github/workflows/release.yml#L44-L47) with [extensive test suites](https://github.com/pgschema/pgschema/tree/main/testdata).

## Schema-Level Migration

Unlike many migration tools that operate at the database level, `pgschema` is designed to work at the **schema level**.

### Why Schema-Level?

**Single Schema Simplicity**: Most Postgres applications use only the default `public` schema. For these cases, schema-level operations eliminate unnecessary complexity while providing all the functionality needed for effective schema management.

**Multi-Tenant Architecture Support**: For applications using multiple schemas, the predominant pattern is **schema-per-tenant architecture** - where each customer or tenant gets their own schema within the same database. This schema-level approach enables [tenant schema reconciliation](/workflow/tenant-schema) - ensuring all tenant schemas stay in sync with the canonical schema definition.

### Schema-Agnostic Migrations

The tool intelligently handles schema qualifiers to create portable, schema-agnostic dumps and migrations:

```bash
# Dump from 'tenant_123' schema
pgschema dump \
  --host localhost \
  --user postgres \
  --db myapp \
  --schema tenant_123 \
  > schema.sql

# Apply the same schema definition to 'tenant_456'
pgschema plan \
  --host localhost \
  --user postgres \
  --db myapp \
  --schema tenant_456 \
  --file schema.sql
```

Here's what a schema-level dump looks like - notice how it creates **schema-agnostic SQL**:

```sql
--
-- pgschema database dump
--

--
-- Name: users; Type: TABLE; Schema: -; Owner: -
--

CREATE TABLE IF NOT EXISTS users (
    id SERIAL PRIMARY KEY,
    username varchar(100) NOT NULL,
    email varchar(100) NOT NULL,
    role public.user_role DEFAULT 'user',
    status public.status DEFAULT 'active',
    created_at timestamp DEFAULT now()
);

--
-- Name: posts; Type: TABLE; Schema: -; Owner: -
--

CREATE TABLE IF NOT EXISTS posts (
    id SERIAL PRIMARY KEY,
    user_id integer REFERENCES users(id),
    title varchar(200) NOT NULL,
    content text,
    created_at timestamp DEFAULT now()
);
```

The tool automatically:

* **Strips schema qualifiers** from table and index names (notice `Schema: -` in comments)

* **Removes schema prefixes** from object references (`users` instead of `tenant_123.users`)

* **Preserves cross-schema type references** where needed (`public.user_role` for shared types)

* **Creates portable DDL** that can be applied to any target schema
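To illustrate the idea of stripping one schema's qualifiers while preserving cross-schema references, here is a deliberately naive sketch. It is not pgschema's implementation: it uses a regular expression rather than a SQL parser, so it would also rewrite matches inside string literals or comments. The function name `strip_schema` is hypothetical.

```python
import re


def strip_schema(sql, schema):
    """Drop `schema.` qualifiers from identifiers while leaving other schemas intact."""
    # \b keeps us from matching inside longer names such as my_tenant_123.
    pattern = re.compile(rf'\b{re.escape(schema)}\.(?=[A-Za-z_"])')
    return pattern.sub("", sql)


ddl = "CREATE TABLE tenant_123.posts (user_id integer REFERENCES tenant_123.users(id), role public.user_role);"
portable = strip_schema(ddl, "tenant_123")
```

Here `portable` no longer mentions `tenant_123`, while `public.user_role` is left untouched, which is the property that lets the same file be planned against another tenant schema.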

## Declarative Workflow

This workflow mirrors Terraform's approach and fits [GitOps](/workflow/gitops) nicely:

### 1. Dump: Extract Current Schema

```bash
pgschema dump \
  --host localhost \
  --user postgres \
  --db myapp \
  > schema.sql
```

This extracts your current database schema into a clean, readable SQL file that represents your current state.

### 2. Edit: Define Desired State

Edit the dumped schema file to reflect your desired changes. Instead of writing `ALTER TABLE` statements, you write `CREATE TABLE` definitions to specify the desired end state.

### 3. Plan: Preview Changes

```bash
pgschema plan \
  --host localhost \
  --user postgres \
  --db myapp \
  --file schema.sql \
  --output-human \
  --output-json plan.json \
  --output-sql plan.sql
```

This compares your desired schema (from the file) with the current database state and generates a migration plan. Here's where the tool differs from Terraform: the plan supports multiple output formats.

The human-readable format is perfect for reviewing changes during development:

```
Plan: 1 to alter.

Summary by type:
  tables: 1 to alter

Tables:
  ~ users

Transaction: true

DDL to be executed:
--------------------------------------------------

ALTER TABLE users ADD COLUMN name varchar(100);
```

The JSON format enables easy integration with CI/CD pipelines and approval workflows:

```json
{
  "version": "1.0.0",
  "pgschema_version": "1.0.0",
  "created_at": "2025-08-13T10:30:15+08:00",
  "source_fingerprint": {
    "hash": "7a8b9c1d2e3f4a5b6c7d8e9f0a1b2c3d4e5f6a7b8c9d0e1f2a3b4c5d6e7f8a9b"
  },
  "diffs": [
    {
      "sql": "ALTER TABLE users ADD COLUMN name varchar(100);",
      "type": "table",
      "operation": "alter",
      "path": "public.users",
      "source": {
        "schema": "public",
        "name": "users",
        "type": "BASE_TABLE",
        "columns": [
          {
            "name": "id",
            "position": 1,
            "data_type": "integer",
            "is_nullable": false
          },
          {
            "name": "email",
            "position": 2,
            "data_type": "character varying",
            "character_maximum_length": 255,
            "is_nullable": false
          },
          {
            "name": "created_at",
            "position": 3,
            "data_type": "timestamp without time zone",
            "is_nullable": true,
            "column_default": "now()"
          },
          {
            "name": "name",
            "position": 4,
            "data_type": "character varying",
            "character_maximum_length": 100,
            "is_nullable": true
          }
        ]
      },
      "can_run_in_transaction": true
    }
  ]
}
```
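As a rough illustration of the CI/CD integration, a pipeline step could read the plan JSON and decide whether a human needs to sign off. The sketch below relies only on fields visible in the example plan (`diffs`, `operation`, `path`, `can_run_in_transaction`). The `"drop"` operation value and the `review_plan` helper are assumptions for illustration, not documented pgschema behavior.

```python
import json


def review_plan(plan_text, blocked_operations=("drop",)):
    """Summarize a plan and list diffs whose operation needs manual approval."""
    plan = json.loads(plan_text)
    diffs = plan.get("diffs", [])
    # "drop" is an assumed operation name; adjust to whatever the plan really emits.
    flagged = [d["path"] for d in diffs if d.get("operation") in blocked_operations]
    non_tx = [d["path"] for d in diffs if not d.get("can_run_in_transaction", True)]
    return {"total": len(diffs), "needs_approval": flagged, "non_transactional": non_tx}


example = json.dumps({
    "diffs": [
        {"operation": "alter", "path": "public.users", "can_run_in_transaction": True},
        {"operation": "drop", "path": "public.legacy", "can_run_in_transaction": True},
    ]
})
summary = review_plan(example)
```

A pipeline could fail the job, or request an approval, whenever `summary["needs_approval"]` is non-empty.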

The SQL format shows the exact SQL to be executed, enabling custom tooling to inspect and analyze the changes:

```sql
ALTER TABLE users ADD COLUMN name varchar(100);
```

### 4. Apply: Execute Changes

```bash
pgschema apply \
  --plan plan.json \
  --host localhost \
  --user postgres \
  --db myapp
```

This executes the planned changes safely, with built-in protections against concurrent schema modifications and proper transaction handling.

## Concurrent Change Detection

The separation between `plan` and `apply` phases creates a critical safety feature but also introduces a potential risk: **what if the database changes between planning and execution?** This time window could lead to applying outdated or conflicting migrations.

The tool solves this with **fingerprinting** - a cryptographic mechanism that ensures the exact database state you planned against is the same state you're applying changes to.

### How Fingerprinting Works

**During Plan Generation**: When you run `pgschema plan`, it calculates a cryptographic fingerprint of the current database schema state and embeds it in the plan file:

```json
{
  "version": "1.0.0",
  "pgschema_version": "1.0.0",
  "created_at": "2025-08-12T17:44:43+08:00",
  "source_fingerprint": {
    "hash": "965b1131737c955e24c7f827c55bd78e4cb49a75adfd04229e0ba297376f5085"
  },
  "diffs": [...]
}
```

**During Apply Execution**: Before executing any changes, `pgschema apply` recalculates the current database fingerprint and compares it with the stored fingerprint from the plan.
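The plan-time and apply-time checks can be sketched as follows. This is a minimal illustration and not pgschema's actual algorithm: it assumes the fingerprint is a SHA-256 digest over a canonical JSON encoding of the schema state, and the function names are hypothetical. Only the `source_fingerprint.hash` field comes from the plan format shown above.

```python
import hashlib
import json


def schema_fingerprint(schema_state):
    """Hash a canonical JSON encoding so equal schemas always yield equal digests."""
    canonical = json.dumps(schema_state, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def check_fingerprint(plan, current_state):
    """Raise if the database no longer matches the state the plan was built against."""
    expected = plan["source_fingerprint"]["hash"]
    actual = schema_fingerprint(current_state)
    if expected != actual:
        raise RuntimeError(
            f"schema fingerprint mismatch - expected: {expected[:16]}, actual: {actual[:16]}"
        )


planned_state = {"users": {"id": "integer", "email": "character varying"}}
plan = {"source_fingerprint": {"hash": schema_fingerprint(planned_state)}}
check_fingerprint(plan, planned_state)  # passes: nothing changed since planning
```

Sorting keys before hashing matters here: two introspections that return the same objects in a different order should still produce the same fingerprint.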

### Safety in Action

This fingerprinting prevents dangerous scenarios:

```bash
# Team member A generates plan
pgschema plan \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --output-json plan.json

# Time passes... Team member B makes emergency schema change

# Team member A tries to apply original plan
pgschema apply \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  --plan plan.json

# ❌ Error: schema fingerprint mismatch detected - the database schema has changed since the plan was generated.
#
# schema fingerprint mismatch - expected: 965b1131737c955e, actual: abc123456789abcd
```

### Recover Fingerprint Mismatch

When a fingerprint mismatch occurs, the right approach is to reestablish the baseline with the current database state:

```bash
# 1. Get the latest schema from the current database
pgschema dump \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  > current-schema.sql

# 2. Update your desired schema file with any new changes
# (merge your intended changes with the current state)

# 3. Plan the changes against the updated baseline
pgschema plan \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  --file updated-schema.sql \
  --output-json new-plan.json

# 4. Apply with the fresh plan
pgschema apply \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  --plan new-plan.json
```

This fingerprinting mechanism maintains the integrity of the Plan-Review-Apply workflow, ensuring that concurrent modifications don't lead to unexpected or conflicting database changes.

## Online DDL Operations

The tool automatically uses PostgreSQL's non-blocking features to minimize downtime during schema changes, including concurrent index creation, NOT VALID constraint patterns, and safe NOT NULL additions.

**Example - Concurrent Index Creation**: Index operations automatically use `CREATE INDEX CONCURRENTLY` to avoid blocking table writes:

```sql
-- Generated migration for index addition
CREATE INDEX CONCURRENTLY IF NOT EXISTS idx_users_email_status ON users (email, status DESC);

-- pgschema:wait - Built-in progress monitoring
SELECT
    COALESCE(i.indisvalid, false) as done,
    CASE
        WHEN p.blocks_total > 0 THEN p.blocks_done * 100 / p.blocks_total
        ELSE 0
    END as progress
FROM pg_class c
LEFT JOIN pg_index i ON c.oid = i.indexrelid
LEFT JOIN pg_stat_progress_create_index p ON c.oid = p.index_relid
WHERE c.relname = 'idx_users_email_status';
```

The `pgschema:wait` directive blocks migration execution, polls the database to monitor progress, and automatically continues when operations complete.
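The block-poll-continue behavior can be pictured with a small loop. This is only a sketch of the general pattern, not pgschema's code: `run_query` stands in for executing the monitoring query and returning its `(done, progress)` row, and the `poll_interval` and `timeout` parameters are assumptions.

```python
import time


def wait_until_done(run_query, poll_interval=1.0, timeout=3600.0,
                    sleep=time.sleep, clock=time.monotonic):
    """Poll run_query() until it reports done; return the progress values seen."""
    deadline = clock() + timeout
    seen = []
    while True:
        done, progress = run_query()  # one row shaped like (done, progress)
        seen.append(progress)
        if done:
            return seen
        if clock() >= deadline:
            raise TimeoutError("operation did not finish before the timeout")
        sleep(poll_interval)


# Simulated query results: the index build reports 0%, 50%, then completes.
rows = iter([(False, 0), (False, 50), (True, 100)])
history = wait_until_done(lambda: next(rows), sleep=lambda seconds: None)
```

Injecting `sleep` and `clock` keeps the loop testable without a real database or real waiting.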

For comprehensive details, see the [Online DDL documentation](/workflow/online-ddl).

## Adaptive Transaction

The system automatically determines transaction boundaries based on the SQL operations in your migration plan. Each diff in the plan contains a `can_run_in_transaction` field that indicates whether that particular SQL can run inside a transaction.

```json plan.json snippet
"diffs": [
  ...
  {
    "sql": "ALTER TABLE department\nADD CONSTRAINT department_dept_name_key UNIQUE (dept_name);",
    "type": "table.constraint",
    "operation": "create",
    "path": "public.department.department_dept_name_key",
    "source": {
      "schema": "public",
      "table": "department",
      "name": "department_dept_name_key",
      "type": "UNIQUE",
      "columns": [
        {
          "name": "dept_name",
          "position": 1
        }
      ]
    },
    "can_run_in_transaction": true <--------- transaction indication
  },
  ...
]
```

### Smart Transaction Wrapping

* **If all diffs can run in a transaction**: The entire plan is wrapped in a single transaction for maximum atomicity

* **If any diff cannot run in a transaction**: Each diff runs in its own transaction to maintain isolation

Among the schema-level DDL operations the tool supports, only `CREATE INDEX CONCURRENTLY` cannot run inside a transaction.
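The wrapping rule described in this section can be expressed as a small grouping function. This is a sketch of the stated rule only, using the `can_run_in_transaction` field from the plan JSON. The function name is hypothetical, and how a non-transactional statement is actually executed is not modeled here.

```python
def transaction_groups(diffs):
    """Split diffs into lists, where each inner list is executed as one unit."""
    diffs = list(diffs)
    if not diffs:
        return []
    if all(d.get("can_run_in_transaction", False) for d in diffs):
        return [diffs]             # everything is safe: one atomic transaction
    return [[d] for d in diffs]    # otherwise: each diff is handled on its own


all_safe = [
    {"sql": "ALTER TABLE users ADD COLUMN name varchar(100);", "can_run_in_transaction": True},
    {"sql": "ALTER TABLE users ADD COLUMN age integer;", "can_run_in_transaction": True},
]
mixed = all_safe + [
    {"sql": "CREATE INDEX CONCURRENTLY idx_users_name ON users (name);", "can_run_in_transaction": False},
]
```

With these inputs, `transaction_groups(all_safe)` yields a single group of two diffs, while `transaction_groups(mixed)` yields three single-diff groups.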

## Modular Schema Organization

For large applications and teams, managing your database schema as a single monolithic file becomes unwieldy. The [modular](/workflow/modular-schema-files) approach transforms your schema into an organized structure that enables better collaboration, clearer ownership, and easier maintenance.

### Breaking Down the Monolith

Instead of a single large schema file, the tool can organize your database objects into logical, manageable files with the [`--multi-file`](/cli/dump#param-multi-file) option:

```bash
# Initialize modular structure from existing database
mkdir -p schema
pgschema dump \
  --host localhost \
  --db myapp \
  --user postgres \
  --multi-file \
  --file schema/main.sql

# Examine the generated structure
tree schema/
```

This creates an organized directory structure. The `main.sql` file serves as the entry point, containing Postgres `\i` (include) directives that reference individual component files.

```
schema/
├── main.sql                  # Entry point with include directives
├── types/
│   ├── user_status.sql
│   ├── order_status.sql
│   └── address.sql
├── domains/
│   ├── email_address.sql
│   └── positive_integer.sql
├── sequences/
│   ├── global_id_seq.sql
│   └── order_number_seq.sql
├── tables/
│   ├── users.sql
│   └── orders.sql
├── functions/
│   ├── update_timestamp.sql
│   ├── get_user_count.sql
│   └── get_order_count.sql
├── procedures/
│   ├── cleanup_orders.sql
│   └── update_status.sql
└── views/
    ├── user_summary.sql
    └── order_details.sql
```

```sql
-- Include custom types first (dependencies for tables)
\i types/user_status.sql
\i types/order_status.sql
\i types/address.sql

-- Include domain types (constrained base types)
\i domains/email_address.sql
\i domains/positive_integer.sql

-- Include sequences (may be used by tables)
\i sequences/global_id_seq.sql
\i sequences/order_number_seq.sql

-- Include core tables (with their constraints, indexes, and policies)
\i tables/users.sql
\i tables/orders.sql

-- Include functions and procedures
\i functions/update_timestamp.sql
\i functions/get_user_count.sql
\i procedures/cleanup_orders.sql

-- Include views (depend on tables and functions)
\i views/user_summary.sql
\i views/order_details.sql
```

```sql
--
-- Name: update_timestamp(); Type: FUNCTION; Schema: -; Owner: -
--

CREATE OR REPLACE FUNCTION update_timestamp()
RETURNS trigger
LANGUAGE plpgsql
SECURITY INVOKER
VOLATILE
AS $$
BEGIN
    NEW.updated_at = CURRENT_TIMESTAMP;
    RETURN NEW;
END;
$$;
```

### Team Collaboration Benefits

This modular approach enables powerful team collaboration patterns:

**Granular Ownership**: Use GitHub's CODEOWNERS to assign different teams to different parts of your schema:

```
# .github/CODEOWNERS
schema/tables/users* @user-team
schema/tables/orders* @orders-team
schema/tables/products* @inventory-team
schema/views/user_* @user-team
schema/views/order_* @orders-team
schema/functions/*inventory* @inventory-team
```

**Independent Development**: Teams can work on separate schema components without conflicts, test their changes in isolation, then combine for coordinated production deployments.

**Focused Code Reviews**: Instead of reviewing massive schema changes, reviewers can focus on specific files relevant to their domain expertise.

### Same Workflow, Better Organization

The declarative workflow remains identical - you're still defining desired state and letting the tool generate the migration plan:

```bash
# Teams edit their respective schema files to define desired state
# Example: Add new column to users table
vim schema/tables/users.sql

# Generate migration plan from modular schema
pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema/main.sql \
  --output-human \
  --output-json plan.json \
  --output-sql plan.sql

# Apply the coordinated changes
pgschema apply \
  --host localhost \
  --db myapp \
  --user postgres \
  --plan plan.json
```

The multi-file approach scales from small teams working on focused components to large organizations managing complex schemas with hundreds of objects, while maintaining the same simple, declarative workflow.

## Dependency Management

As schemas grow in complexity, manually ordering DDL statements becomes increasingly error-prone. A single schema file with hundreds of objects becomes unmanageable—you're constantly scrolling and reordering statements to satisfy dependencies.

The modular schema approach introduces even greater challenges. With schema files spread across directories, tracking dependencies between objects becomes nearly impossible. A trigger in `triggers/audit.sql` might depend on a function in `functions/timestamps.sql`, which itself references types defined in `types/custom.sql`.

Database objects often depend on each other—triggers need functions, views reference tables, foreign keys require referenced tables to exist first. The tool automatically handles these dependencies using topological sorting to ensure operations execute in the correct order, regardless of how your schema files are organized.

### Automatic Dependency Resolution

Consider this modular schema example, where you're adding a table with a trigger that depends on a function:

```sql
-- tables/users.sql
CREATE TABLE public.users (
    id serial PRIMARY KEY,
    name text NOT NULL,
    email text UNIQUE,
    created_at timestamp DEFAULT CURRENT_TIMESTAMP,
    updated_at timestamp DEFAULT CURRENT_TIMESTAMP
);

-- triggers/update_users_modified_time.sql
CREATE TRIGGER update_users_modified_time
    BEFORE UPDATE ON public.users
    FOR EACH ROW
    EXECUTE FUNCTION public.update_modified_time();

-- functions/update_modified_time.sql
CREATE OR REPLACE FUNCTION public.update_modified_time()
RETURNS trigger AS $$
BEGIN
    NEW.updated_at = CURRENT_TIMESTAMP;
    RETURN NEW;
END;
$$ LANGUAGE plpgsql;
```

```sql
-- pgschema automatically orders operations correctly:
CREATE TABLE IF NOT EXISTS users (
    id SERIAL PRIMARY KEY,
    name text NOT NULL,
    email text UNIQUE,
    created_at timestamp DEFAULT CURRENT_TIMESTAMP,
    updated_at timestamp DEFAULT CURRENT_TIMESTAMP
);

CREATE OR REPLACE FUNCTION update_modified_time()
RETURNS trigger
LANGUAGE plpgsql
SECURITY INVOKER
VOLATILE
AS $$
BEGIN
    NEW.updated_at = CURRENT_TIMESTAMP;
    RETURN NEW;
END;
$$;

CREATE OR REPLACE TRIGGER update_users_modified_time
    BEFORE UPDATE ON users
    FOR EACH ROW
    EXECUTE FUNCTION update_modified_time();
```

The topological sort algorithm ensures that:

* Tables are created before their indexes, constraints, and triggers

* Functions are created before triggers that use them

* Referenced tables exist before foreign key constraints

* Views are created after all their dependent tables and functions

This automatic dependency management eliminates manual ordering errors and ensures migrations always execute successfully.
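For readers unfamiliar with topological sorting, here is a minimal sketch using Python's standard-library `graphlib`. It illustrates the technique rather than pgschema's internals: the dependency map is hand-written for the example above, and `order_objects` is a hypothetical helper.

```python
from graphlib import TopologicalSorter


def order_objects(depends_on):
    """Return object names so every object appears after the objects it depends on."""
    sorter = TopologicalSorter()
    for name in sorted(depends_on):  # sorted for a stable, repeatable order
        sorter.add(name, *sorted(depends_on[name]))
    return list(sorter.static_order())  # raises graphlib.CycleError on cycles


# The trigger needs both the table it fires on and the function it executes.
dependencies = {
    "trigger update_users_modified_time": {"table users", "function update_modified_time"},
    "table users": set(),
    "function update_modified_time": set(),
}
ordered = order_objects(dependencies)
```

Whatever order the files are listed in, the trigger ends up after both the table and the function in `ordered`, which mirrors the ordering of the generated DDL above.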

## No Shadow Database Required

Unlike many declarative schema migration tools, this approach doesn't require a separate "shadow" or "dev" database to compute schema differences. Other tools typically create a temporary database, apply your desired schema to it, then compare this shadow database with your target database to generate migrations.

This shadow database approach has several drawbacks:

* **Counterintuitive Configuration**: Most developers stumble on the additional shadow database flags and need to read documentation to understand what they're used for

* **Complex Setup**: Requires permissions for temporary database creation and cleanup of temporary databases

* **Additional Infrastructure**: Demands extra database resources, maintenance, and can cause inconsistencies with different Postgres versions or extensions

### IR Normalization

This tool eliminates these complexities by working directly with your schema files and target database through an **Intermediate Representation (IR)** system:

```plain
 SQL Files        Database System Catalogs
 (Desired)               (Current)
     |                       |
     | Parse                 | Query
     v                       v
                 IR
            (Normalized)
                 |
                 v
            Diff Engine
                 |
                 v
           Migration Plan
```

**1. SQL Parsing**: Your desired state SQL files are parsed and converted into a normalized Intermediate Representation.

**2. Database Introspection**: The target database schema is introspected through Postgres system catalogs (`pg_class`, `pg_attribute`, `pg_constraint`, etc.) and converted into the same normalized IR format.

**3. Direct Comparison**: The diff engine compares these two normalized IR structures directly, identifying what changes are needed without requiring any temporary databases.

**4. Migration Generation**: The differences are converted into properly ordered DDL statements that transform the current state into the desired state.

The IR normalization ensures that regardless of whether schema information comes from SQL parsing or database introspection, it's represented consistently for accurate comparison - enabling precise migrations without the overhead and complexity of shadow databases.
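A toy version of this idea is sketched below, under heavy simplifying assumptions: the "IR" is just a `(name, type, length)` tuple per column, SQL-side parsing is a regular expression rather than a real Postgres parser, and all function names are hypothetical. The catalog-side row shape is borrowed from the column entries in the plan JSON.

```python
import re

# Spelling variants that should compare equal once normalized.
TYPE_ALIASES = {"varchar": "character varying", "int": "integer"}


def from_sql(name, type_text):
    """Normalize a column as written in a SQL file, e.g. ('name', 'VARCHAR(100)')."""
    match = re.fullmatch(r"\s*([A-Za-z ]+?)\s*(?:\((\d+)\))?\s*", type_text)
    if match is None:
        raise ValueError(f"unsupported type syntax: {type_text!r}")
    base = match.group(1).lower()
    length = int(match.group(2)) if match.group(2) else None
    return (name, TYPE_ALIASES.get(base, base), length)


def from_catalog(row):
    """Normalize a column row shaped like the plan JSON's column entries."""
    return (row["name"], row["data_type"], row.get("character_maximum_length"))


def added_columns(desired, current):
    """Columns present in the desired IR but missing from the current IR."""
    existing = {name for name, _, _ in current}
    return [col for col in desired if col[0] not in existing]


desired = [from_sql("email", "VARCHAR(255)"), from_sql("name", "varchar(100)")]
current = [from_catalog({"name": "email", "data_type": "character varying",
                         "character_maximum_length": 255})]
missing = added_columns(desired, current)
```

Because both sides are reduced to the same tuple shape, `VARCHAR(255)` from a file and `character varying` with length 255 from the catalog compare equal, and only the `name` column shows up in `missing`.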

## Acknowledgements

`pgschema` wouldn't exist without these amazing open source projects:

* [sqlc](https://github.com/sqlc-dev/sqlc) - For generating code to extract schema from target databases

* [pg\_query\_go](https://github.com/pganalyze/pg_query_go) - Go bindings for libpg\_query, enabling reliable Postgres SQL parsing. In fact, sqlc also uses pg\_query\_go under the hood! It's the secret weapon in the Postgres ecosystem that allows tools to build near-perfect Postgres compatibility.

* [testcontainers](https://github.com/testcontainers/) - Enabling comprehensive integration testing with real Postgres instances

**Design reference** came from:

* [Terraform](https://github.com/hashicorp/terraform) - For figuring out the right Plan-Review-Apply workflow that developers love

* [pg-schema-diff](https://github.com/stripe/pg-schema-diff) - Reading GitHub issues to understand use cases and studying the codebase. For example, I knew sqlc beforehand, but when I saw pg-schema-diff uses sqlc to fetch schema information, it immediately clicked.

And the real inspiration came from hundreds of customer conversations over 4+ years building [Bytebase](https://www.bytebase.com). Developers kept telling us: "Why can't database migrations be as simple as Terraform?" Well, now they can be.

Special thanks to Claude Code for sweating millions of tokens to make this project possible. Turns out AI is pretty good at writing database migration tools – who knew? 🤖

***

Ready to give it a try?

* [Installation](/installation)

* [Join Discord](https://discord.gg/pgschema) or open [GitHub Issues](https://github.com/pgschema/pgschema/issues)

# Apply

Source: https://www.pgschema.com/cli/apply

The `apply` command applies database schema changes to a target database schema. You can either provide a desired state file to generate and apply a plan, or execute a pre-generated plan file directly.

## Overview

The apply command supports two execution modes:

### File Mode (Generate and Apply)

1. Read the desired state from a SQL file (with include directive support)

2. Compare it with the current database state of the target schema

3. Generate a migration plan with proper dependency ordering

4. Display the plan for review

5. Apply the changes (with optional confirmation and safety checks)

### Plan Mode (Execute Pre-generated Plan)

1. Load a pre-generated plan from JSON file

2. Validate plan version compatibility and schema fingerprints

3. Display the plan for review

4. Apply the changes (with optional confirmation and safety checks)

## Basic Usage

```bash
# File mode: Generate and apply plan from desired state file
pgschema apply --host localhost --db myapp --user postgres --password mypassword --file schema.sql

# Plan mode: Execute pre-generated plan
pgschema apply --host localhost --db myapp --user postgres --password mypassword --plan plan.json

# Auto-approve mode for CI/CD (no confirmation prompt)
pgschema apply --host localhost --db myapp --user postgres --password mypassword --file schema.sql --auto-approve
```

## Connection Options

* `--host`: Database server host

* `--port`: Database server port

* `--db`: Database name

* `--user`: Database user name

* `--password`: Database password (can also use the `PGPASSWORD` environment variable)

You can provide the password in two ways:

```bash Environment Variable (Recommended)
PGPASSWORD=mypassword pgschema apply \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql
```

```bash Command Line Flag
pgschema apply \
  --host localhost \
  --db myapp \
  --user postgres \
  --password mypassword \
  --file schema.sql
```

* `--schema`: Schema name to apply changes to

## Apply Options

* `--file`: Path to desired state SQL schema file (mutually exclusive with `--plan`). Used in File Mode to generate and apply a plan from the desired state.

* `--plan`: Path to pre-generated plan JSON file (mutually exclusive with `--file`). Used in Plan Mode to execute a plan that was previously generated with `pgschema plan --output-json`.

* `--auto-approve`: Apply changes without prompting for approval. Useful for automated deployments and CI/CD pipelines.

* `--no-color`: Disable colored output in the plan display. Useful for scripts, CI/CD environments, or terminals that don't support colors.

* `--lock-timeout`: Maximum time to wait for database locks (e.g., '30s', '5m', '1h'). If not specified, uses PostgreSQL's default behavior (wait indefinitely). See [PostgreSQL lock\_timeout documentation](https://www.postgresql.org/docs/current/runtime-config-client.html#GUC-LOCK-TIMEOUT).

* `--application-name`: Application name for database connection (visible in pg\_stat\_activity). See [PostgreSQL application\_name documentation](https://www.postgresql.org/docs/current/libpq-connect.html#LIBPQ-CONNECT-APPLICATION-NAME).

## Examples

### File Mode (Generate and Apply)

```bash
# Interactive mode - shows plan and prompts for confirmation
pgschema apply --host localhost --db myapp --user postgres --file desired_schema.sql
```

This will:

1. Generate a migration plan by comparing the desired state with current database

2. Display the plan with colored output

3. Prompt: "Do you want to apply these changes? (yes/no):"

4. Wait for confirmation before proceeding

5. Apply changes using transactions where possible

Example output:

```
Plan: 2 to add, 1 to modify.

Summary by type:
  tables: 1 to add, 1 to modify
  indexes: 1 to add

Tables:
  + posts
  ~ users
    + email (column)

Indexes:
  + idx_users_email

Transaction: true

DDL to be executed:
--------------------------------------------------

CREATE TABLE IF NOT EXISTS posts (
    id SERIAL PRIMARY KEY,
    title TEXT NOT NULL,
    user_id INTEGER REFERENCES users(id)
);

ALTER TABLE users ADD COLUMN email VARCHAR(255) UNIQUE;

CREATE INDEX idx_users_email ON users(email);

Do you want to apply these changes? (yes/no):
```

### Plan Mode (Execute Pre-generated Plan)

```bash
# First, generate a plan
pgschema plan --host localhost --db myapp --user postgres --file schema.sql --output-json plan.json

# Then apply the pre-generated plan
pgschema apply --host localhost --db myapp --user postgres --plan plan.json
```

Benefits of plan mode:

* **Separation of concerns**: Generate plans in one environment, apply in another

* **Review process**: Plans can be reviewed before deployment

* **Repeatability**: Same plan can be applied to multiple environments

* **Version control**: Plans can be stored and versioned

* **Rollback preparation**: Generate rollback plans before applying changes

### Auto-approve for CI/CD

```bash

pgschema apply \
  --host prod-db \
  --db myapp \
  --user deployer \
  --file schema.sql \
  --auto-approve \
  --no-color

```

### With Lock Timeout

```bash

# Prevent blocking on long-running locks
pgschema apply \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --lock-timeout "30s"

```

### Custom Application Name

```bash

pgschema apply \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --application-name "pgschema-deployment-v1.1.0"

```

```sql

-- Monitor active pgschema connections:

SELECT application_name, state, query_start, query

FROM pg_stat_activity

WHERE application_name LIKE 'pgschema%';

```

## Safety Features

### Schema Fingerprint Validation

When using plan mode, pgschema validates that the database schema hasn't changed since the plan was generated:

```bash

# Generate plan

pgschema plan --host localhost --db myapp --user postgres --file schema.sql --output-json plan.json

# Database schema changes here...

# Apply plan - will detect changes and fail

pgschema apply --host localhost --db myapp --user postgres --plan plan.json

```

If schema changes are detected:

```

Error: schema fingerprint mismatch detected - the database schema has changed since the plan was generated.

Expected fingerprint: abc123...
Current fingerprint: def456...
Difference: Table 'users' was modified

To resolve this issue:
1. Regenerate the plan with current database state: pgschema plan ...
2. Review the new plan to ensure it's still correct
3. Apply the new plan: pgschema apply ...

```

### Version Compatibility

Plans include version information to ensure compatibility:

* **pgschema version**: Must match the version used to generate the plan

* **Plan format version**: Must be supported by the current pgschema version
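As a rough illustration, the two checks could be expressed as follows. This is a minimal sketch, not pgschema's implementation: the field names `version` and `pgschema_version` come from the plan JSON example in the `plan` command documentation, while `SUPPORTED_PLAN_FORMATS`, `check_plan_compatibility`, and the message wording are hypothetical.

```python
# Hypothetical set of plan format versions this build understands.
SUPPORTED_PLAN_FORMATS = {"1.0.0"}


def check_plan_compatibility(plan: dict, tool_version: str) -> list[str]:
    """Return human-readable problems; an empty list means the plan is usable."""
    problems = []

    # The plan must have been generated by the same pgschema version.
    plan_tool_version = plan.get("pgschema_version")
    if plan_tool_version != tool_version:
        problems.append(
            f"plan was generated by pgschema {plan_tool_version}, "
            f"but this is pgschema {tool_version}"
        )

    # The plan format itself must be one this version can read.
    plan_format = plan.get("version")
    if plan_format not in SUPPORTED_PLAN_FORMATS:
        problems.append(f"unsupported plan format version {plan_format}")

    return problems


plan = {"version": "1.0.0", "pgschema_version": "1.0.0"}
print(check_plan_compatibility(plan, tool_version="1.0.0"))  # []
```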

### Transaction Handling

pgschema automatically determines whether changes can run in a transaction:

* **Transactional mode** (default): All changes run in a single transaction with automatic rollback on failure

* **Non-transactional mode**: Some operations (like `CREATE INDEX CONCURRENTLY`) run outside transactions

```

Transaction: true # Changes will run in a transaction

Transaction: false # Some changes cannot run in a transaction

```
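One way to picture this decision, as a sketch under assumptions rather than pgschema's actual logic: the plan JSON example in the `plan` command documentation carries a `can_run_in_transaction` flag on each diff, and a plan could be treated as transactional only when every diff allows it. The helper name `plan_runs_in_transaction` is hypothetical.

```python
def plan_runs_in_transaction(plan: dict) -> bool:
    """True only if every diff in the plan may run inside a transaction."""
    # A diff without the flag is treated conservatively as non-transactional.
    return all(
        diff.get("can_run_in_transaction", False)
        for diff in plan.get("diffs", [])
    )


plan = {
    "diffs": [
        {
            "sql": "CREATE TABLE posts (id SERIAL PRIMARY KEY);",
            "can_run_in_transaction": True,
        },
        {
            "sql": "CREATE INDEX CONCURRENTLY idx_posts_id ON posts(id);",
            "can_run_in_transaction": False,
        },
    ]
}
print(f"Transaction: {str(plan_runs_in_transaction(plan)).lower()}")  # Transaction: false
```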

### No-op Detection

If no changes are needed, pgschema skips execution:

```

No changes to apply. Database schema is already up to date.

```

# Dump

Source: https://www.pgschema.com/cli/dump

The `dump` command extracts the definition of a single schema from a PostgreSQL database and outputs it in a developer-friendly format. The dumped schema serves as a baseline that developers can modify and apply to target databases using the `plan` and `apply` commands.

## Overview

The dump command provides comprehensive schema extraction with:

1. Single schema targeting (defaults to 'public')

2. Dependency-aware object ordering

3. Cross-schema reference handling with smart qualification

4. Developer-friendly SQL output format

5. Single-file and multi-file organization options

## Basic Usage

```bash

# Dump default schema (public)

pgschema dump --host localhost --db myapp --user postgres --password mypassword

# Dump specific schema

pgschema dump --host localhost --db myapp --user postgres --password mypassword --schema myschema

# Save to file

pgschema dump --host localhost --db myapp --user postgres --password mypassword > schema.sql

# Multi-file organized output

pgschema dump --host localhost --db myapp --user postgres --password mypassword --multi-file --file schema.sql

```

## Integration with Plan/Apply

```bash

# 1. Dump current production schema

pgschema dump --host prod-host --db myapp --user postgres --schema public > current.sql

# 2. Make modifications to current.sql (now desired state)

# 3. Plan changes against staging

pgschema plan --host staging-host --db myapp --user postgres --file current.sql

# 4. Apply changes if plan looks good

pgschema apply --host staging-host --db myapp --user postgres --file current.sql

```

## Connection Options

* `--host`: Database server host

* `--port`: Database server port

* `--db`: Database name

* `--user`: Database user name

* `--password`: Database password (can also use the `PGPASSWORD` environment variable)

You can provide the password in two ways:

```bash Environment Variable (Recommended)

PGPASSWORD=mypassword pgschema dump \
  --host localhost \
  --db myapp \
  --user postgres

```

```bash Command Line Flag

pgschema dump \
  --host localhost \
  --db myapp \
  --user postgres \
  --password mypassword

```

`--schema`: Schema name to dump

## Output Options

`--multi-file`: Output schema to multiple files organized by object type. See [Multi-file Schema Management Workflow](/workflow/multi-file-schema).

When enabled, creates a structured directory with:

* Main file with header and include statements

* Separate directories for different object types (tables, views, functions, etc.)

* Each database object in its own file

Requires `--file` to specify the main output file path.

`--file`: Output file path (required when `--multi-file` is used)

For single-file mode, this is optional (defaults to stdout).

For multi-file mode, this specifies the main file path.

## Examples

### Schema Dump

```bash

# Dump default schema (public)

pgschema dump --host localhost --db myapp --user postgres

# Dump specific schema

pgschema dump --host localhost --db myapp --user postgres --schema analytics

```

Example output:

```sql

--
-- pgschema database dump
--

-- Dumped from database version PostgreSQL 17.5
-- Dumped by pgschema version 1.0.0

--
-- Name: users; Type: TABLE; Schema: -; Owner: -
--

CREATE TABLE IF NOT EXISTS users (
    id SERIAL PRIMARY KEY,
    name VARCHAR(255) NOT NULL,
    email VARCHAR(255) UNIQUE,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

--
-- Name: idx_users_email; Type: INDEX; Schema: -; Owner: -
--

CREATE INDEX IF NOT EXISTS idx_users_email ON users(email);

```

### Multi-File Output

```bash

pgschema dump \
  --host localhost \
  --db myapp \
  --user postgres \
  --multi-file \
  --file schema.sql

```

This creates a structured directory layout:

```

schema.sql              # Main file with header and includes
├── tables/
│   ├── users.sql
│   ├── orders.sql
│   └── products.sql
├── views/
│   └── user_stats.sql
├── functions/
│   └── update_timestamp.sql
└── procedures/
    └── cleanup_old_data.sql

```

The main `schema.sql` file contains:

```sql

--
-- pgschema database dump
--

-- Dumped from database version PostgreSQL 17.5
-- Dumped by pgschema version 1.0.0

\i tables/users.sql
\i tables/orders.sql
\i tables/products.sql
\i views/user_stats.sql
\i functions/update_timestamp.sql
\i procedures/cleanup_old_data.sql

```

Each individual file (e.g., `tables/users.sql`) contains the specific object definition:

```sql

--
-- Name: users; Type: TABLE; Schema: -; Owner: -
--

CREATE TABLE IF NOT EXISTS users (
    id SERIAL PRIMARY KEY,
    name VARCHAR(255) NOT NULL,
    email VARCHAR(255) UNIQUE,
    created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
);

--
-- Name: idx_users_email; Type: INDEX; Schema: -; Owner: -
--

CREATE INDEX IF NOT EXISTS idx_users_email ON users(email);

--
-- Name: users; Type: RLS; Schema: -; Owner: -
--

ALTER TABLE users ENABLE ROW LEVEL SECURITY;

```

## Schema Qualification

`pgschema` uses smart schema qualification to make dumps portable:

* **Objects within the dumped schema**: No schema qualifier added

* **Objects from other schemas**: Fully qualified with schema name

This approach makes the dump suitable as a baseline that can be applied to different schemas, particularly useful for multi-tenant applications.

```bash

# Dump the 'public' schema

pgschema dump --host localhost --db myapp --user postgres --schema public

```

Output for objects within 'public' schema (no qualification):

```sql

-- Objects in the dumped schema have no qualifier
CREATE TABLE users (
    id SERIAL PRIMARY KEY,
    name VARCHAR(255) NOT NULL
);

CREATE TABLE orders (
    id SERIAL PRIMARY KEY,
    user_id INTEGER REFERENCES users(id), -- Same schema, no qualifier
    product_id INTEGER REFERENCES catalog.products(id) -- Different schema, qualified
);

```

This qualification strategy enables using one schema as a template for multiple tenants:

```bash

# 1. Dump the template schema

pgschema dump --host localhost --db myapp --user postgres --schema template > template.sql

# 2. Apply to different tenant schemas

pgschema apply --host localhost --db myapp --user postgres --schema tenant1 --file template.sql

pgschema apply --host localhost --db myapp --user postgres --schema tenant2 --file template.sql

pgschema apply --host localhost --db myapp --user postgres --schema tenant3 --file template.sql

```

Because objects within the schema are not qualified, they will be created in whichever schema you specify during the apply command.
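The qualification rule itself is small enough to sketch. The snippet below is only an illustration of the rule as described above, not pgschema's code: the function name `qualify` is hypothetical, and identifier quoting is ignored for brevity.

```python
def qualify(object_schema: str, name: str, dumped_schema: str) -> str:
    """Return the name as it would appear in a dump of `dumped_schema`."""
    if object_schema == dumped_schema:
        # Same schema as the dump target: leave the name unqualified so the
        # DDL can be applied to any schema.
        return name
    # Object lives elsewhere: keep the schema prefix so the reference
    # still resolves after the dump is applied to a different schema.
    return f"{object_schema}.{name}"


print(qualify("public", "users", dumped_schema="public"))      # users
print(qualify("catalog", "products", dumped_schema="public"))  # catalog.products
```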

# Plan

Source: https://www.pgschema.com/cli/plan

The `plan` command generates a migration plan to apply a desired schema state to a target database schema. It compares the desired state (from a file) with the current state of a specific schema and shows what changes would be applied.

## Overview

The plan command follows infrastructure-as-code principles similar to Terraform:

1. Read the desired state from a SQL file (with include directive support)

2. Connect to the target database and analyze current state of the specified schema

3. Compare the two states

4. Generate a detailed migration plan with proper dependency ordering

5. Display the plan without making any changes

## Basic Usage

```bash

# Generate plan to apply schema.sql to the target database

pgschema plan --host localhost --db myapp --user postgres --password mypassword --file schema.sql

# Plan with specific schema

pgschema plan --host localhost --db myapp --user postgres --password mypassword --schema myschema --file schema.sql

# Generate JSON output for automation

pgschema plan --host localhost --db myapp --user postgres --password mypassword --file schema.sql --output-json stdout

# Generate SQL migration script

pgschema plan --host localhost --db myapp --user postgres --password mypassword --file schema.sql --output-sql stdout

# Save multiple output formats simultaneously

pgschema plan --host localhost --db myapp --user postgres --password mypassword --file schema.sql \
  --output-human plan.txt --output-json plan.json --output-sql migration.sql

# Disable colored output (useful for scripts or CI/CD)

pgschema plan --host localhost --db myapp --user postgres --password mypassword --file schema.sql --no-color

```

## Connection Options

* `--host`: Database server host

* `--port`: Database server port

* `--db`: Database name

* `--user`: Database user name

* `--password`: Database password (can also use the `PGPASSWORD` environment variable)

You can provide the password in two ways:

```bash Environment Variable (Recommended)

PGPASSWORD=mypassword pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql

```

```bash Command Line Flag

pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --password mypassword \
  --file schema.sql

```

`--schema`: Schema name to target for comparison

## Plan Options

`--file`: Path to the desired state SQL schema file

`--output-human`: Output human-readable format to stdout or a file path

Examples:

* `--output-human stdout` - Display to terminal

* `--output-human plan.txt` - Save to file

`--output-json`: Output JSON format to stdout or a file path

This JSON format is the same format accepted by the [apply command](/cli/apply) for executing migration plans.

Examples:

* `--output-json stdout` - Display to terminal

* `--output-json plan.json` - Save to file

`--output-sql`: Output SQL format to stdout or a file path

Examples:

* `--output-sql stdout` - Display to terminal

* `--output-sql migration.sql` - Save to file

`--no-color`: Disable colored output for human format when writing to stdout

This is useful for:

* Scripts and automation that need to parse output

* CI/CD environments that don't support color codes

* Redirecting output to files where color codes are unwanted

Note: This flag only affects human format output to stdout. File output and JSON/SQL formats are never colored.

## Examples

### Default Human-Readable Output

```bash

pgschema plan --host localhost --db myapp --user postgres --file schema.sql

```

```

Plan: 4 to add, 1 to modify, 1 to drop.

Summary by type:
  tables: 2 to add, 1 to modify, 1 to drop
  indexes: 1 to add
  functions: 1 to add

Tables:
  + users
  + posts
  ~ products
    + discount_rate (column)
    - old_price (column)
  - legacy_data

Indexes:
  + idx_users_email

Functions:
  + update_timestamp()

Transaction: true

DDL to be executed:
--------------------------------------------------

CREATE TABLE IF NOT EXISTS users (
    id SERIAL PRIMARY KEY,
    name VARCHAR(255) NOT NULL,
    email VARCHAR(255) UNIQUE
);

CREATE TABLE IF NOT EXISTS posts (
    id SERIAL PRIMARY KEY,
    title TEXT NOT NULL,
    user_id INTEGER REFERENCES users(id)
);

CREATE INDEX idx_users_email ON users(email);

CREATE FUNCTION update_timestamp() RETURNS TRIGGER AS $$
BEGIN
    NEW.updated_at = NOW();
    RETURN NEW;
END;
$$ LANGUAGE plpgsql;

ALTER TABLE products ADD COLUMN discount_rate numeric(5,2);

ALTER TABLE products DROP COLUMN old_price;

DROP TABLE legacy_data;

```

### JSON Output for Automation

```bash

pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --output-json stdout

```

```json

{
  "version": "1.0.0",
  "pgschema_version": "1.0.0",
  "created_at": "2025-01-15T10:30:00Z",
  "source_fingerprint": {
    "hash": "abc123def456..."
  },
  "diffs": [
    {
      "sql": "CREATE TABLE IF NOT EXISTS users (\n id SERIAL PRIMARY KEY,\n name VARCHAR(255) NOT NULL,\n email VARCHAR(255) UNIQUE\n);",
      "type": "table",
      "operation": "create",
      "path": "public.users",
      "source": {
        "schema": "public",
        "name": "users",
        "type": "BASE_TABLE",
        "columns": [
          {
            "name": "id",
            "position": 1,
            "data_type": "integer",
            "is_nullable": false,
            "default_value": "nextval('users_id_seq'::regclass)"
          },
          {
            "name": "name",
            "position": 2,
            "data_type": "character varying",
            "is_nullable": false,
            "character_maximum_length": 255
          }
        ],
        "constraints": {
          "users_pkey": {
            "schema": "public",
            "table": "users",
            "name": "users_pkey",
            "type": "PRIMARY_KEY",
            "columns": [
              {
                "name": "id",
                "position": 1
              }
            ]
          }
        }
      },
      "can_run_in_transaction": true
    }
  ]
}

```

### SQL Migration Script

```bash

pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --output-sql stdout

```

```sql

CREATE TABLE IF NOT EXISTS users (
    id SERIAL PRIMARY KEY,
    name VARCHAR(255) NOT NULL,
    email VARCHAR(255) UNIQUE
);

CREATE TABLE IF NOT EXISTS posts (
    id SERIAL PRIMARY KEY,
    title TEXT NOT NULL,
    user_id INTEGER REFERENCES users(id)
);

CREATE INDEX idx_users_email ON users(email);

CREATE FUNCTION update_timestamp() RETURNS TRIGGER AS $$
BEGIN
    NEW.updated_at = NOW();
    RETURN NEW;
END;
$$ LANGUAGE plpgsql;

ALTER TABLE products ADD COLUMN discount_rate numeric(5,2);

ALTER TABLE products DROP COLUMN old_price;

DROP TABLE legacy_data;

```

### Plan for Specific Schema

```bash

pgschema plan \
  --host localhost \
  --db multi_tenant \
  --user postgres \
  --schema tenant1 \
  --file tenant_schema.sql

```

## Use Cases

### Pre-deployment Validation

```bash

# Check what changes will be applied before deployment
pgschema plan \
  --host prod-db \
  --db myapp \
  --user readonly \
  --file new_schema.sql

# If plan looks good, proceed with apply
pgschema apply \
  --host prod-db \
  --db myapp \
  --user deployer \
  --file new_schema.sql

```

### CI/CD Integration

```yaml

# GitHub Actions example
- name: Validate Schema Changes
  run: |
    pgschema plan \
      --host ${{ secrets.DB_HOST }} \
      --db ${{ secrets.DB_NAME }} \
      --user ${{ secrets.DB_USER }} \
      --file schema/proposed.sql \
      --output-json plan.json \
      --no-color

    # Check if there are destructive changes
    if jq -e '.summary.to_destroy > 0' plan.json; then
      echo "Warning: Destructive changes detected!"
      exit 1
    fi

```
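If `jq` is not available in the pipeline, a similar gate can be sketched in Python. This is an illustration only: it scans the `sql` field of each entry in `diffs` (both names appear in the JSON output example earlier on this page) for a `DROP` keyword, which is a cruder test than the summary check in the workflow above and can misfire on a `DROP` inside a string literal. The function name `destructive_statements` is hypothetical.

```python
import re

# Matches DROP TABLE, DROP COLUMN, DROP INDEX, ... anywhere in a statement.
DROP_KEYWORD = re.compile(r"\bDROP\b", re.IGNORECASE)


def destructive_statements(plan: dict) -> list[str]:
    """Return the SQL of every diff that contains a DROP keyword."""
    return [
        diff["sql"]
        for diff in plan.get("diffs", [])
        if DROP_KEYWORD.search(diff.get("sql", ""))
    ]


plan = {
    "diffs": [
        {"sql": "ALTER TABLE products ADD COLUMN discount_rate numeric(5,2);"},
        {"sql": "ALTER TABLE products DROP COLUMN old_price;"},
        {"sql": "DROP TABLE legacy_data;"},
    ]
}
for statement in destructive_statements(plan):
    print(f"Warning: destructive change: {statement}")
```

In a real pipeline the dictionary would come from `json.load` on the saved `plan.json`, and a non-empty result would fail the job.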

### Change Tracking

```bash

# Generate plan and save for audit
DATE=$(date +%Y%m%d_%H%M%S)
pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --output-sql "migrations/plan_${DATE}.sql"

```

### Multiple Output Formats

You can generate multiple output formats simultaneously:

```bash

# Save all formats for comprehensive documentation
pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --output-human plan.txt \
  --output-json plan.json \
  --output-sql migration.sql

# Display human-readable format and save JSON for automation
pgschema plan \
  --host localhost \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --output-human stdout \
  --output-json automation/plan.json \
  --no-color

```

Note: Only one output format can use `stdout`. If no output flags are specified, the command defaults to human-readable output to stdout with colors enabled.
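The rule in this note can be restated as a small validation routine. The sketch below is not taken from pgschema; `resolve_outputs` and its parameter names are hypothetical and only mirror the `--output-human`, `--output-json`, and `--output-sql` flags.

```python
from typing import Optional


def resolve_outputs(
    human: Optional[str] = None,
    json_out: Optional[str] = None,
    sql: Optional[str] = None,
) -> dict[str, str]:
    """Map each requested format to its target ("stdout" or a file path)."""
    requested = (("human", human), ("json", json_out), ("sql", sql))
    outputs = {name: target for name, target in requested if target}

    # No output flags at all: fall back to human-readable output on stdout.
    if not outputs:
        return {"human": "stdout"}

    # At most one format may write to stdout.
    on_stdout = [name for name, target in outputs.items() if target == "stdout"]
    if len(on_stdout) > 1:
        raise ValueError(
            f"only one output format can use stdout, got: {', '.join(on_stdout)}"
        )
    return outputs


print(resolve_outputs())  # {'human': 'stdout'}
print(resolve_outputs(human="stdout", json_out="automation/plan.json"))
```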

## Comparison Direction

The plan command is **unidirectional**: it always plans changes from the current state (database) to the desired state (file).

```

Current State (Database) → Desired State (File)

```

This ensures:

* Consistent infrastructure-as-code workflow

* Clear source of truth (the file)

* Predictable change direction

## Include Directive Support

The plan command supports include directives in schema files, allowing you to organize your schema across multiple files:

```sql

-- main.sql

\i tables/users.sql

\i tables/posts.sql

\i functions/triggers.sql

```

The include processor will resolve all includes relative to the directory containing the main schema file.
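To make the resolution rule concrete, here is a minimal sketch of an include expander. It is an assumption-laden illustration rather than pgschema's include processor: `expand_includes` is a hypothetical name, only one level of `\i` directives is expanded, and paths are resolved against the directory of the main file as described above.

```python
import re
from pathlib import Path

# A line consisting of "\i" followed by a single path.
INCLUDE_DIRECTIVE = re.compile(r"^\\i\s+(\S+)$")


def expand_includes(main_file: Path) -> str:
    """Return the main file's content with each \\i line replaced by the file it names."""
    base_dir = main_file.parent
    expanded = []
    for line in main_file.read_text().splitlines():
        match = INCLUDE_DIRECTIVE.match(line.strip())
        if match:
            # Resolve relative to the directory containing the main schema file.
            included = base_dir / match.group(1)
            expanded.append(included.read_text().rstrip("\n"))
        else:
            expanded.append(line)
    return "\n".join(expanded) + "\n"
```

Calling `expand_includes(Path("schema/main.sql"))` would then yield a single SQL document with `tables/users.sql` and the other included files inlined in order.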

# For Coding Agent

Source: https://www.pgschema.com/coding-agent

This guide shows how to integrate pgschema with AI coding agents.

## llms.txt

pgschema provides official llms.txt files for AI agents that support [llms.txt](https://llmstxt.org/) context:

* **Concise version**: [pgschema.com/llms.txt](https://www.pgschema.com/llms.txt) - Essential commands and patterns

* **Full version**: [pgschema.com/llms-full.txt](https://www.pgschema.com/llms-full.txt) - Complete API reference and examples

AI agents can automatically fetch these context files to understand pgschema's core workflow: dump → edit → plan → apply.

## CLAUDE.md / AGENTS.md

For optimal code integration, add this configuration to your [CLAUDE.md](https://claude.md) or [AGENTS.md](https://agents.md/):

````markdown

## Project Overview

`pgschema` is a CLI tool that brings a Terraform-style declarative schema migration workflow to PostgreSQL. It provides a dump/edit/plan/apply workflow for database schema changes:

- **Dump**: Extract current schema in a developer-friendly format

- **Edit**: Modify schema files to represent desired state

- **Plan**: Compare desired state with current database and generate migration plan

- **Apply**: Execute the migration with safety features like concurrent change detection

The tool supports PostgreSQL versions 14-17 and handles most common schema objects (tables, indexes, functions, views, constraints, etc.).

## Core Workflow

### 1. Dump Current Schema

Extract the current database schema in a clean, developer-friendly format:

```bash

# Basic dump

PGPASSWORD=password pgschema dump --host localhost --db myapp --user postgres > schema.sql

# Multi-file output for large schemas

pgschema dump --host localhost --db myapp --user postgres --multi-file --file schema.sql

# Specific schema (not public)

pgschema dump --host localhost --db myapp --user postgres --schema tenant1 > schema.sql

```

### 2. Edit Schema Files

Modify the dumped schema files to represent your desired state. pgschema compares the desired state (files) with current state (database) to generate migrations.

### 3. Generate Migration Plan

Preview what changes will be applied:

```bash

# Generate plan

PGPASSWORD=password pgschema plan --host localhost --db myapp --user postgres --file schema.sql

# Save plan in multiple formats

pgschema plan --host localhost --db myapp --user postgres --file schema.sql \
  --output-human plan.txt --output-json plan.json --output-sql migration.sql

```

### 4. Apply Changes

Execute the migration:

```bash

# Apply from file (generate plan and apply)

PGPASSWORD=password pgschema apply --host localhost --db myapp --user postgres --file schema.sql

# Apply from pre-generated plan

pgschema apply --host localhost --db myapp --user postgres --plan plan.json

# Auto-approve for CI/CD

pgschema apply --host localhost --db myapp --user postgres --file schema.sql --auto-approve

```

## Key Features

### Connection Options

Use these connection parameters for all commands:

- `--host` - Database host (default: localhost)

- `--port` - Database port (default: 5432)

- `--db` - Database name (required)

- `--user` - Database user (required)

- `--password` - Database password (or use PGPASSWORD environment variable)

- `--schema` - Target schema (default: public)

### Multi-File Schema Management

For large schemas, use `--multi-file` to organize by object type:

```bash

pgschema dump --host localhost --db myapp --user postgres --multi-file --file schema/main.sql

```

Creates organized directory structure:

```

schema/
├── main.sql              # Contains \i include directives
├── tables/
├── views/
├── functions/
└── indexes/

```

### Online DDL Support

pgschema automatically uses safe patterns for production:

- `CREATE INDEX CONCURRENTLY` for new indexes

- `NOT VALID` + `VALIDATE CONSTRAINT` for constraints

- Progress monitoring for long-running operations

### Safety Features

- **Schema fingerprinting**: Detects concurrent schema changes

- **Transaction handling**: Automatic rollback on failure

- **Dependency ordering**: Objects created/dropped in correct order

- **Lock timeout control**: `--lock-timeout` for production safety

## Common Use Cases

### Development Workflow

```bash

# 1. Dump production schema

pgschema dump --host prod --db myapp --user postgres > baseline.sql

# 2. Make changes to baseline.sql (desired state)

# 3. Test against staging

pgschema plan --host staging --db myapp --user postgres --file baseline.sql

# 4. Apply to staging

pgschema apply --host staging --db myapp --user postgres --file baseline.sql

```

### Multi-Tenant Schema Management

```bash

# Dump template schema

pgschema dump --host localhost --db myapp --user postgres --schema template > template.sql

# Apply to multiple tenants

pgschema apply --host localhost --db myapp --user postgres --schema tenant1 --file template.sql

pgschema apply --host localhost --db myapp --user postgres --schema tenant2 --file template.sql

```

### CI/CD Integration

```bash

# Generate and save plan for review

pgschema plan --host prod --db myapp --user postgres --file schema.sql \
  --output-json plan.json --no-color

# Apply in deployment

pgschema apply --host prod --db myapp --user postgres --plan plan.json --auto-approve

```

## Supported Schema Objects

pgschema handles most common PostgreSQL schema objects:

- **Tables**: Full DDL including columns, constraints, defaults

- **Indexes**: Regular and partial indexes with concurrent creation

- **Views**: Regular and materialized views

- **Functions**: PL/pgSQL and SQL functions with all options

- **Procedures**: Stored procedures

- **Triggers**: Table triggers with dependency handling

- **Types**: Custom types (enum, composite, domain)

- **Constraints**: Primary keys, foreign keys, unique, check constraints

- **Sequences**: Auto-generated and custom sequences

- **Policies**: Row-level security policies

- **Comments**: Comments on all supported objects

For unsupported objects, see the documentation.

## Additional Resources

- **Concise reference**: [pgschema.com/llms.txt](https://www.pgschema.com/llms.txt) - Essential commands and patterns

- **Complete reference**: [pgschema.com/llms-full.txt](https://www.pgschema.com/llms-full.txt) - Full API reference and examples

- **Documentation**: [pgschema.com](https://www.pgschema.com) - Complete documentation

````

# FAQ

Source: https://www.pgschema.com/faq

## Getting Help

* Report bugs or request features? 👉 [GitHub Issues](https://github.com/pgschema/pgschema/issues)

* Community for pgschema users? 👉 Join [Discord](https://discord.gg/rvgZCYuJG4)

* Need commercial support? 👉 [Contact us](mailto:support@bytebase.com)

## Compatibility

### Which PostgreSQL versions are supported?

pgschema is tested with PostgreSQL versions 14, 15, 16, and 17. While it may work with older versions, we recommend using one of these tested versions for the best experience.

### Can I use pgschema with cloud PostgreSQL services?

Yes, pgschema works with any PostgreSQL-compatible database that you can connect to, including:

* Amazon RDS for PostgreSQL

* Google Cloud SQL for PostgreSQL

* Azure Database for PostgreSQL

* Supabase

* Neon

* And other PostgreSQL-compatible services

### How do I handle database-specific settings?

pgschema focuses on schema structure (tables, indexes, functions, etc.) and doesn't manage:

* Database-level settings

* User/role management

* Tablespace configuration

* Extensions

These should be managed separately through your infrastructure tooling. See [unsupported syntax](/syntax/unsupported).

### What happens if a migration fails?

1. For most changes, pgschema executes them in a transaction and will roll back on failure

2. For changes containing non-transactional statements (like `CREATE INDEX CONCURRENTLY`), each statement runs in its own transaction

3. Your database will remain in its previous state for transactional changes. When migrations include non-transactional DDL statements, partial application may occur if a later statement fails

4. You'll see a clear error message indicating what went wrong

5. Fix the issue in your schema file and try again

## Troubleshooting

### Why does pgschema show no changes when I expect some?

Common causes:

* The changes are in objects pgschema doesn't track yet

* The database already matches your desired state

* You're comparing against the wrong schema (check [`--schema`](/cli/plan#param-schema) flag)

Run with the `--debug` flag for more detailed output.

### How do I debug connection issues?

For connection problems:

1. Verify PostgreSQL is running: `pg_isready -h host -p port`

2. Check your credentials and permissions

3. Ensure the database exists

4. Check network/firewall settings

5. Try connecting with `psql` using the same parameters

### What permissions does pgschema need?

pgschema needs:

* `CONNECT` privilege on the database

* `USAGE` privilege on the target schema

* `CREATE` privilege on the schema (for new objects)

* Appropriate privileges for all object types you're managing

For read-only operations (dump, plan), only `SELECT` privileges are needed.

## Advanced Topics

### How does pgschema handle dependencies?

pgschema automatically:

* Detects dependencies between database objects

* Orders DDL statements to respect dependencies

* Creates objects in the correct order

* Drops objects in reverse dependency order
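The ordering idea can be sketched with a topological sort from Python's standard library. This is an illustration of the general technique, not pgschema's implementation; the object names and the `creation_order` / `drop_order` helpers are made up for the example.

```python
from graphlib import TopologicalSorter

# Each object maps to the set of objects it depends on (illustrative names).
dependencies = {
    "users": set(),
    "posts": {"users"},            # posts.user_id references users(id)
    "idx_users_email": {"users"},  # the index is built on users
}


def creation_order(deps: dict[str, set[str]]) -> list[str]:
    """Dependencies first, dependents after."""
    return list(TopologicalSorter(deps).static_order())


def drop_order(deps: dict[str, set[str]]) -> list[str]:
    """Dependents first, so nothing is dropped while still referenced."""
    return list(reversed(creation_order(deps)))


print(creation_order(dependencies)[0])  # users
print(drop_order(dependencies)[-1])     # users
```

`TopologicalSorter` raises `graphlib.CycleError` on circular dependencies, which a real tool would have to handle separately, for example by splitting a foreign key into a later `ALTER TABLE`.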

### How does pgschema detect concurrent schema changes?

pgschema uses fingerprinting to detect if the database schema has changed since the migration plan was created:

* A fingerprint (hash) of the current schema is calculated during planning

* Before applying changes, pgschema verifies the fingerprint still matches

* If the schema has changed (concurrent modifications), the apply operation will fail safely

* This prevents conflicts and ensures migrations are applied to the expected schema state
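The check can be pictured with a short sketch. It assumes, purely for illustration, that the fingerprint is a SHA-256 hash over whitespace-normalized DDL text; how pgschema actually derives its fingerprint is not specified here, and the names `fingerprint` and `verify_unchanged` are hypothetical.

```python
import hashlib


def fingerprint(schema_ddl: str) -> str:
    """Hash the DDL after trimming lines, so indentation changes do not matter."""
    normalized = "\n".join(
        line.strip() for line in schema_ddl.splitlines() if line.strip()
    )
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def verify_unchanged(planned_hash: str, current_ddl: str) -> None:
    """Raise if the schema no longer matches the fingerprint stored in the plan."""
    current_hash = fingerprint(current_ddl)
    if current_hash != planned_hash:
        raise RuntimeError(
            "schema fingerprint mismatch: "
            f"expected {planned_hash[:12]}..., got {current_hash[:12]}..."
        )


# At plan time: record the fingerprint of the current schema.
ddl_at_plan_time = "CREATE TABLE users (\n    id SERIAL PRIMARY KEY\n);\n"
planned = fingerprint(ddl_at_plan_time)

# At apply time: passes only while the schema is still the same.
verify_unchanged(planned, ddl_at_plan_time)
```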

### Can I use pgschema for database comparisons?

Yes! You can compare schemas across databases:

```bash

# Dump both schemas

pgschema dump --host db1 --db myapp --user postgres > schema1.sql

pgschema dump --host db2 --db myapp --user postgres > schema2.sql

# Compare with diff

diff schema1.sql schema2.sql

```

## License

See [pgschema Community License](https://github.com/pgschema/pgschema/blob/main/LICENSE).

**Q: Can I use pgschema for my startup?**\
Yes, if your company’s annual gross revenue is ≤ US \$10M and you are not competing with pgschema.

**Q: I’m a large company using pgschema internally. Do I need a license?**\
Yes, if your company’s total global annual gross revenue is > US \$10M.

**Q: What counts as “competing with pgschema”?**\
Offering software or services that are managing, transforming, or validating PostgreSQL schema for others.

**Q: How is the \$10M threshold calculated?**

* Total annual gross revenue before deductions

* Includes all affiliates, parents, and subsidiaries

* Based on the prior fiscal year

**Q: What if I want to remove these restrictions?**\
[Contact us](mailto:support@bytebase.com) for a commercial license.

# Introduction

Source: https://www.pgschema.com/index

Declarative schema migration for Postgres


While database migrations have traditionally been imperative (writing step-by-step migration scripts), declarative solutions are gaining traction.

`pgschema` differentiates itself from other declarative tools by:

* **Comprehensive Postgres Support**: Handles virtually all schema-level database objects - tables, indexes, views, functions, procedures, triggers, policies, types, and more. Thoroughly tested against Postgres versions 14 through 17.

* **Schema-Level Focus**: Designed for real-world Postgres usage patterns, from single-schema applications to multi-tenant architectures.

* **Terraform-Like Plan-Review-Apply Workflow**: Generate a detailed migration plan before execution, review changes in human-readable, SQL, or JSON formats, then apply with confidence. No surprises in production.

* **Concurrent Change Detection**: Built-in fingerprinting ensures the database hasn't changed between planning and execution.

* **Online DDL Support**: Automatically uses PostgreSQL's non-blocking strategies to minimize downtime during schema changes.

* **Adaptive Transaction**: Intelligently wraps migrations in transactions when possible, with automatic handling of operations like concurrent index creation that require special treatment.

* **Modular Schema Organization**: Supports multi-file schema definitions for better team collaboration and ownership.

* **Dependency Management**: Automatically resolves complex dependencies between database objects using topological sorting, ensuring operations execute in the correct order.

* **No Shadow Database Required**: Unlike other declarative tools, it works directly with your schema files and target database - no temporary databases, no extra infrastructure.

## Comprehensive Postgres Support

`pgschema` supports a wide range of [Postgres features](/syntax/create_table), including:

* Tables, columns, and constraints (primary keys, foreign keys, unique constraints, check constraints)

* Indexes (including partial, functional, and concurrent indexes)

* Views and materialized views

* Functions and stored procedures

* Custom types and domains

* Schemas and permissions

* Row-level security (RLS) policies

* Triggers and sequences

* Comments on database objects

The tool is thoroughly tested against [Postgres versions 14 through 17](https://github.com/pgschema/pgschema/blob/a41ffe8616f430ba36e6b39982c4455632a0dfa7/.github/workflows/release.yml#L44-L47) with [extensive test suites](https://github.com/pgschema/pgschema/tree/main/testdata).

## Schema-Level Migration

Unlike many migration tools that operate at the database level, `pgschema` is designed to work at the **schema level**.

### Why Schema-Level?

**Single Schema Simplicity**: Most Postgres applications use only the default `public` schema. For these cases, schema-level operations eliminate unnecessary complexity while providing all the functionality needed for effective schema management.

**Multi-Tenant Architecture Support**: For applications using multiple schemas, the predominant pattern is **schema-per-tenant architecture** - where each customer or tenant gets their own schema within the same database. This schema-level approach enables [tenant schema reconciliation](/workflow/tenant-schema) - ensuring all tenant schemas stay in sync with the canonical schema definition.

### Schema-Agnostic Migrations

The tool intelligently handles schema qualifiers to create portable, schema-agnostic dumps and migrations:

```bash

# Dump from 'tenant_123' schema
pgschema dump \
  --host localhost \
  --user postgres \
  --db myapp \
  --schema tenant_123 \
  > schema.sql

# Apply the same schema definition to 'tenant_456'
pgschema plan \
  --host localhost \
  --user postgres \
  --db myapp \
  --schema tenant_456 \
  --file schema.sql

```
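
For tenant schema reconciliation, the same `plan` invocation can be generated once per tenant. The sketch below only builds the command lines, using the flags shown above. The helper name `plan_command` and the tenant list are hypothetical, and how you discover tenant schemas is up to your application.

```python
import shlex


def plan_command(schema, host="localhost", user="postgres",
                 db="myapp", file="schema.sql"):
    """Build the `pgschema plan` argument list for one tenant schema."""
    return [
        "pgschema", "plan",
        "--host", host,
        "--user", user,
        "--db", db,
        "--schema", schema,
        "--file", file,
    ]


# Hypothetical tenant list; in practice this would come from your own registry.
tenants = ["tenant_123", "tenant_456"]
commands = [plan_command(tenant) for tenant in tenants]

for command in commands:
    print(shlex.join(command))
```

Each argument list can be passed to `subprocess.run(command, check=True)` to execute the plan for that tenant.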

Here's what a schema-level dump looks like - notice how it creates **schema-agnostic SQL**:

```sql

--
-- pgschema database dump
--

--
-- Name: users; Type: TABLE; Schema: -; Owner: -
--

CREATE TABLE IF NOT EXISTS users (
    id SERIAL PRIMARY KEY,
    username varchar(100) NOT NULL,
    email varchar(100) NOT NULL,
    role public.user_role DEFAULT 'user',
    status public.status DEFAULT 'active',
    created_at timestamp DEFAULT now()
);

--
-- Name: posts; Type: TABLE; Schema: -; Owner: -
--

CREATE TABLE IF NOT EXISTS posts (
    id SERIAL PRIMARY KEY,
    user_id integer REFERENCES users(id),
    title varchar(200) NOT NULL,
    content text,
    created_at timestamp DEFAULT now()
);

```

The tool automatically:

* **Strips schema qualifiers** from table and index names (notice `Schema: -` in comments)

* **Removes schema prefixes** from object references (`users` instead of `tenant_123.users`)

* **Preserves cross-schema type references** where needed (`public.user_role` for shared types)

* **Creates portable DDL** that can be applied to any target schema
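
The effect of the rules above can be imitated with a small text transformation. This is a deliberately naive regex sketch, not how `pgschema` works internally. The function name is hypothetical, and it ignores quoted identifiers and string literals.

```python
import re


def strip_schema_qualifier(sql, schema):
    """Remove `schema.` prefixes for one schema; other schemas are left alone."""
    # The lookbehind avoids matching inside longer names such as my_tenant_123.
    pattern = re.compile(rf"(?<![\w.]){re.escape(schema)}\.")
    return pattern.sub("", sql)


qualified = (
    "CREATE TABLE tenant_123.posts ("
    "user_id integer REFERENCES tenant_123.users(id), "
    "role public.user_role);"
)
portable = strip_schema_qualifier(qualified, "tenant_123")
print(portable)
```

The `public.user_role` reference survives because only the dumped schema's own qualifier is removed.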

## Declarative Workflow

This workflow mirrors Terraform's approach and fits [GitOps](/workflow/gitops) nicely:

### 1. Dump: Extract Current Schema

```bash

pgschema dump \
  --host localhost \
  --user postgres \
  --db myapp \
  > schema.sql

```

This extracts your current database schema into a clean, readable SQL file that represents your current state.

### 2. Edit: Define Desired State

Edit the dumped schema file to reflect your desired changes. Instead of writing `ALTER TABLE` statements, you write `CREATE TABLE` definitions to specify the desired end state.
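
The idea of deriving a migration from two end states can be shown with a toy diff. The sketch below assumes each table is represented as a hypothetical mapping of column name to type, and handles only added columns. The real tool covers far more cases, and this is not its implementation.

```python
def add_column_statements(table, current, desired):
    """Emit ALTER TABLE ... ADD COLUMN for columns that exist only in `desired`."""
    return [
        f"ALTER TABLE {table} ADD COLUMN {name} {column_type};"
        for name, column_type in desired.items()
        if name not in current
    ]


# Current state, as it might be read from the database.
current = {"id": "integer", "email": "varchar(255)", "created_at": "timestamp"}
# Desired state: the same definition with one extra column.
desired = {**current, "name": "varchar(100)"}

statements = add_column_statements("users", current, desired)
print(statements)
```

You describe only the desired `CREATE TABLE` shape, and the `ALTER TABLE` statement falls out of the comparison.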

### 3. Plan: Preview Changes

```bash

pgschema plan \
  --host localhost \
  --user postgres \
  --db myapp \
  --file schema.sql \
  --output-human \
  --output-json plan.json \
  --output-sql plan.sql

```

This compares your desired schema (from the file) with the current database state and generates a migration plan. Here's where the tool differentiates itself from Terraform: the plan supports multiple output formats.

The human-readable format is perfect for reviewing changes during development:

```

Plan: 1 to alter.

Summary by type:
  tables: 1 to alter

Tables:
  ~ users

Transaction: true

DDL to be executed:
--------------------------------------------------

ALTER TABLE users ADD COLUMN name varchar(100);

```

The JSON format enables easy integration with CI/CD pipelines and approval workflows:

```json

{
  "version": "1.0.0",
  "pgschema_version": "1.0.0",
  "created_at": "2025-08-13T10:30:15+08:00",
  "source_fingerprint": {
    "hash": "7a8b9c1d2e3f4a5b6c7d8e9f0a1b2c3d4e5f6a7b8c9d0e1f2a3b4c5d6e7f8a9b"
  },
  "diffs": [
    {
      "sql": "ALTER TABLE users ADD COLUMN name varchar(100);",
      "type": "table",
      "operation": "alter",
      "path": "public.users",
      "source": {
        "schema": "public",
        "name": "users",
        "type": "BASE_TABLE",
        "columns": [
          {
            "name": "id",
            "position": 1,
            "data_type": "integer",
            "is_nullable": false
          },
          {
            "name": "email",
            "position": 2,
            "data_type": "character varying",
            "character_maximum_length": 255,
            "is_nullable": false
          },
          {
            "name": "created_at",
            "position": 3,
            "data_type": "timestamp without time zone",
            "is_nullable": true,
            "column_default": "now()"
          },
          {
            "name": "name",
            "position": 4,
            "data_type": "character varying",
            "character_maximum_length": 100,
            "is_nullable": true
          }
        ],
        "can_run_in_transaction": true
      }
    }
  ]
}

```
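
As an illustration of the CI/CD use case, a pipeline step could read the JSON plan and summarize it before asking for approval. The sketch below relies only on the `diffs`, `operation`, and `sql` fields shown above. The function name is hypothetical, and the `"drop"` operation value is an assumption, since the example only shows `"alter"`.

```python
import json


def summarize_plan(plan):
    """Count planned operations and collect the SQL of flagged ones."""
    counts = {}
    flagged = []
    for diff in plan.get("diffs", []):
        operation = diff.get("operation", "unknown")
        counts[operation] = counts.get(operation, 0) + 1
        # Assumption: destructive changes carry operation "drop"; adjust this
        # to the values that actually appear in your plans.
        if operation == "drop":
            flagged.append(diff.get("sql", ""))
    return counts, flagged


# Trimmed-down plan using only fields from the example above.
plan = json.loads("""
{
  "version": "1.0.0",
  "diffs": [
    {
      "sql": "ALTER TABLE users ADD COLUMN name varchar(100);",
      "type": "table",
      "operation": "alter",
      "path": "public.users"
    }
  ]
}
""")

counts, flagged = summarize_plan(plan)
print(counts, flagged)
```

A pipeline might fail the job or require a manual approval whenever `flagged` is non-empty.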

The SQL format shows the exact SQL to be executed, enabling custom tooling to inspect and analyze the changes:

```sql

ALTER TABLE users ADD COLUMN name varchar(100);

```

### 4. Apply: Execute Changes

```bash

pgschema apply \
  --plan plan.json \
  --host localhost \
  --user postgres \
  --db myapp

```

This executes the planned changes safely, with built-in protections against concurrent schema modifications and proper transaction handling.

## Concurrent Change Detection

The separation between `plan` and `apply` phases creates a critical safety feature but also introduces a potential risk: **what if the database changes between planning and execution?** This time window could lead to applying outdated or conflicting migrations.

The tool solves this with **fingerprinting** - a cryptographic mechanism that ensures the exact database state you planned against is the same state you're applying changes to.

### How Fingerprinting Works

**During Plan Generation**: When you run `pgschema plan`, it calculates a cryptographic fingerprint of the current database schema state and embeds it in the plan file:

```json

{
  "version": "1.0.0",
  "pgschema_version": "1.0.0",
  "created_at": "2025-08-12T17:44:43+08:00",
  "source_fingerprint": {
    "hash": "965b1131737c955e24c7f827c55bd78e4cb49a75adfd04229e0ba297376f5085"
  },
  "diffs": [...]
}

```

**During Apply Execution**: Before executing any changes, `pgschema apply` recalculates the current database fingerprint and compares it with the stored fingerprint from the plan.
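
The plan-time and apply-time comparison can be sketched as follows. The hash algorithm and the shape of the schema state are assumptions made for illustration: the example uses SHA-256 over canonical JSON, which matches the 64-character hash in the plan file, but the tool's actual fingerprint calculation may differ.

```python
import hashlib
import json


def fingerprint(schema_state):
    """Hash a schema description deterministically.

    Assumption: SHA-256 over canonical JSON, chosen only for illustration.
    """
    canonical = json.dumps(schema_state, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def check_fingerprint(plan, current_state):
    """Raise if the database no longer matches the state the plan was built on."""
    expected = plan["source_fingerprint"]["hash"]
    actual = fingerprint(current_state)
    if expected != actual:
        raise RuntimeError(
            "schema fingerprint mismatch - "
            f"expected: {expected[:16]}, actual: {actual[:16]}"
        )


# Hypothetical schema state captured at plan time.
state_at_plan = {"users": {"id": "integer", "email": "varchar(255)"}}
plan = {"source_fingerprint": {"hash": fingerprint(state_at_plan)}}

# Passes silently while the database is unchanged.
check_fingerprint(plan, state_at_plan)
```

Any change to the hashed state, such as a column added by someone else, produces a different hash and stops the apply before any DDL runs.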

### Safety in Action

This fingerprinting prevents dangerous scenarios:

```bash

# Team member A generates plan
pgschema plan \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  --file schema.sql \
  --output-json plan.json

# Time passes... Team member B makes emergency schema change

# Team member A tries to apply original plan
pgschema apply \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  --plan plan.json

# ❌ Error: schema fingerprint mismatch detected - the database schema has changed since the plan was generated.
#
# schema fingerprint mismatch - expected: 965b1131737c955e, actual: abc123456789abcd

```
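
In automation, you may want to tell a fingerprint mismatch apart from other failures. The sketch below parses the message format from the example above. That format is taken from this page only and may change between versions, so treat the pattern and the helper name as assumptions.

```python
import re

# Pattern based on the error text shown in the example above.
MISMATCH = re.compile(
    r"schema fingerprint mismatch - expected: (\w+), actual: (\w+)"
)


def parse_mismatch(output):
    """Return (expected, actual) if the output reports a mismatch, else None."""
    match = MISMATCH.search(output)
    return (match.group(1), match.group(2)) if match else None


sample = (
    "Error: schema fingerprint mismatch detected - the database schema "
    "has changed since the plan was generated.\n\n"
    "schema fingerprint mismatch - expected: 965b1131737c955e, "
    "actual: abc123456789abcd"
)
result = parse_mismatch(sample)
print(result)
```

A wrapper script could use a non-`None` result as the signal to regenerate the plan instead of retrying the apply.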

### Recovering from a Fingerprint Mismatch

When a fingerprint mismatch occurs, the right approach is to reestablish the baseline with the current database state:

```bash

# 1. Get the latest schema from the current database
pgschema dump \
  --host prod.db.com \
  --db myapp \
  --user postgres \
  > current-schema.sql

# 2. Update your desired schema file with any new changes

# (merge your intended changes with the current state)